Is AI Making Programming Harder? Why "Test Only, Zero Code Review" is an Absolute Disaster

Posted on Saturday, 21 February 2026 in Blog

Recently, an article titled "AI Fatigue is Real" struck a massive chord, perfectly echoing what many developers are feeling right now: programming in the AI era has actually become more exhausting.

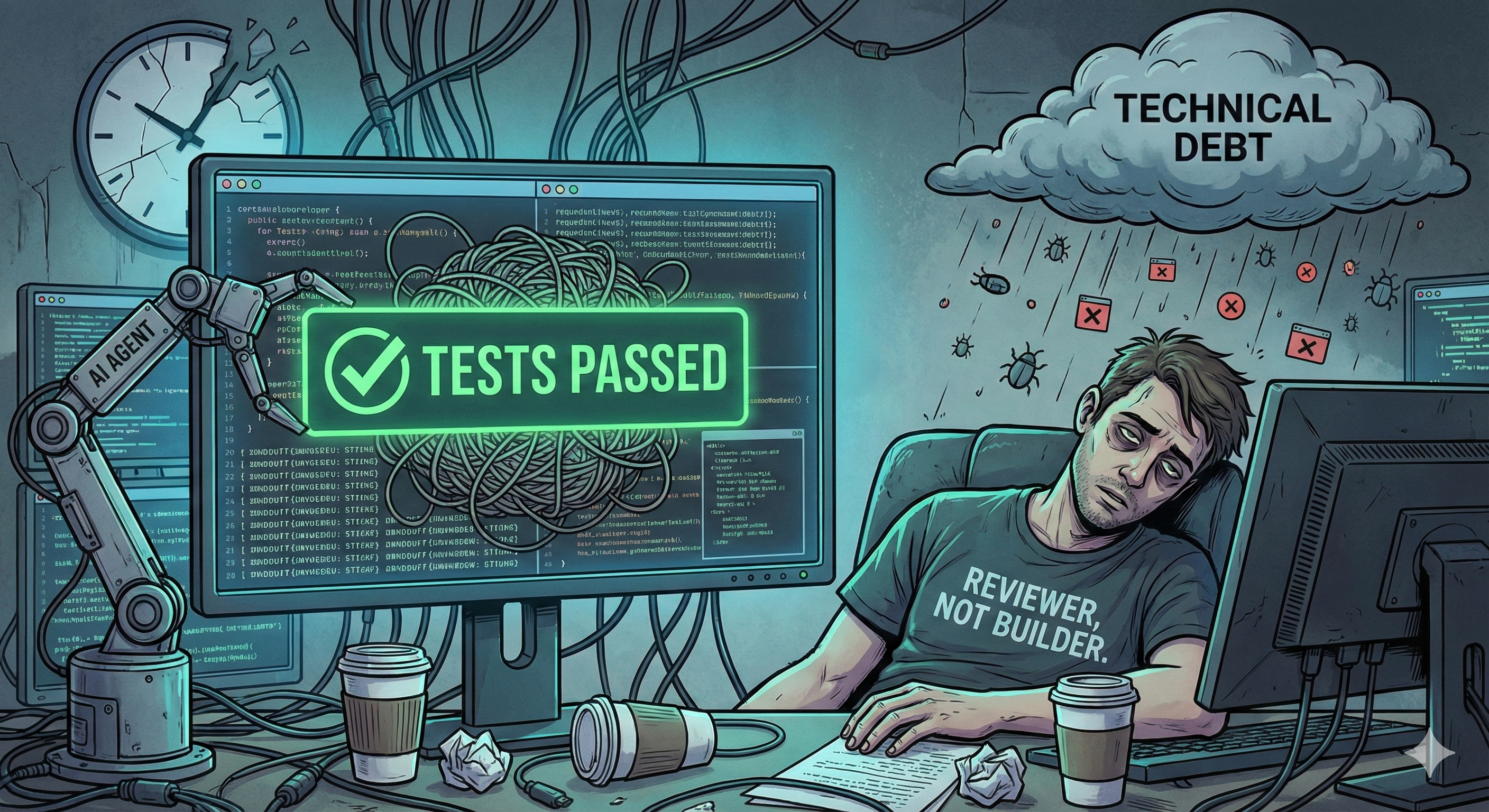

That's because we’ve gone from being Creators (Builders) to Reviewers. AI generates code so quickly and so inconsistently that the review burden has become overwhelming: first, it's impossible to enter a flow state; second, you are constantly context-switching; third, this kind of high-density micro-reviewing is incredibly draining and quickly pushes you into "decision fatigue."

Lately, while promoting my open-source project, the fastfilelink CLI (ffl), across various communities, I’ve been lurking in and observing mainstream developer discussions. What I've seen is endless anxiety, boundless hype, and a flood of "AI will replace developers" methodologies, all accompanied by massive amounts of poorly crafted code.

Some of it makes sense, but a huge portion of it is, frankly speaking, utter bullshit.

To combat this fatigue, a new, incredibly sweet-sounding proposition has emerged in the community (driven especially by the current OpenClaw-dominated faction):

"Don’t review code; review output / tests passed."

Some people even draw an analogy to compilers, arguing that nobody reviews the assembly a compiler generates these days either. Personally, I think this argument is complete dogshit. The reasons are simple:

1. LLMs are not Compilers; they lack "Determinism"

A compiler performs a deterministic translation governed by strict language rules, whereas an LLM is a probabilistic model. When you ask an LLM to generate test cases, the tests themselves are sometimes fake or of terrible quality. Even if every test is green, that doesn't mean the system is fine. You ultimately have to review those test cases, so the premise of "not reviewing at all" is fundamentally unreliable.

2. Lack of Global Vision leads to the collapse of the Single Source of Truth

Constrained by limited context windows and imperfect RAG retrieval, LLMs can only see fragments of a project; they cannot comprehend the system as a whole. The result is generated code packed with redundancy that flagrantly violates the DRY (Don't Repeat Yourself) principle. Once requirements change, the duplicated logic gets updated in some places and missed in others, which readily produces a flood of inconsistency bugs.
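A minimal, hypothetical Python sketch of how duplicated logic turns a requirement change into an inconsistency bug. The upload-limit rule, the 50 MB and 100 MB values, and every function name are invented for illustration (they are not taken from ffl). The same rule was generated twice in different places; when the limit changed, only one copy was updated. Routing every caller through a single helper restores a single source of truth.

```python
# Hypothetical requirement change: the upload limit went from 50 MB to 100 MB.
MAX_UPLOAD_MB = 100


# --- Duplicated logic: the same rule generated twice, in different places ---
def can_start_upload(size_mb: float) -> bool:
    return size_mb <= MAX_UPLOAD_MB  # this copy picked up the new limit


def can_resume_upload(size_mb: float) -> bool:
    return size_mb <= 50  # this copy was missed and still uses the old limit


# --- Single source of truth: one helper that every caller shares ---
def within_upload_limit(size_mb: float) -> bool:
    return size_mb <= MAX_UPLOAD_MB


def can_start_upload_dry(size_mb: float) -> bool:
    return within_upload_limit(size_mb)


def can_resume_upload_dry(size_mb: float) -> bool:
    return within_upload_limit(size_mb)


if __name__ == "__main__":
    size = 80.0
    # Duplicated version: the two entry points disagree about the same file.
    print(can_start_upload(size), can_resume_upload(size))
    # Single-source version: both entry points agree.
    print(can_start_upload_dry(size), can_resume_upload_dry(size))
```

In the duplicated version, an 80 MB file can be started but not resumed. A test suite only catches that if someone thought to test the resume path at that size.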

3. The Context Window Limit is a fatal flaw with no short-term fix

Stop fantasizing that "it'll be fine once the models get stronger." Bound by the practical limits of training data and attention mechanisms (in a standard Transformer, the cost of self-attention grows quadratically with context length), context windows will always have a ceiling. And no matter how large context windows become, projects will only grow larger. Therefore, the architectural collapse described in Point 2 is practically unsolvable in the foreseeable future.

4. Trapped in a "Chicken-and-Egg" infinite loop

To prevent the weird, inconsistent edge-case bugs described in Point 2, your Test Coverage must approach 100%, and the tests must be designed with extreme rigor. This brings us right back to Point 1: Test Cases are themselves Code, generated (and heavily copy-pasted) by the same LLM.

If you want to save yourself the effort of reviewing Production Code, you must spend equal (or even more) effort reviewing a massive pile of Test Code. You are merely trading one hell for another.
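Coverage numbers alone don't deliver that rigor. In this hypothetical Python sketch, `classify` has an off-by-one boundary bug (assuming a made-up spec in which files of exactly 100 MB are allowed), yet the first test executes every line, so a coverage tool would report full coverage while the assertions check almost nothing. Making it rigorous means writing boundary cases with explicit expected values, and those expected values are precisely the Test Code a human still has to review.

```python
def classify(size_mb: float) -> str:
    # Hypothetical spec: sizes up to and including 100 MB are "ok".
    # Bug: uses < instead of <=, so exactly 100 MB is rejected.
    if size_mb < 100:
        return "ok"
    return "too_large"


def test_classify_full_coverage() -> None:
    # Runs both branches (full line and branch coverage) but only checks
    # that the result is *some* valid label, so the boundary bug slips through.
    assert classify(10) in ("ok", "too_large")
    assert classify(500) in ("ok", "too_large")


# Rigorous version: explicit boundary cases. Each expected label is a claim
# that someone must verify against the spec.
BOUNDARY_CASES = [
    (99.9, "ok"),
    (100.0, "ok"),  # the boundary the buggy code gets wrong
    (100.1, "too_large"),
]


def failing_boundary_cases() -> list[float]:
    """Return the inputs whose result disagrees with the expected label."""
    return [size for size, expected in BOUNDARY_CASES if classify(size) != expected]


if __name__ == "__main__":
    test_classify_full_coverage()  # passes despite the bug
    print("boundary failures:", failing_boundary_cases())
```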

The Cruel Reality: This is a game of "Compute Capitalism"

Therefore, a methodology that only cares whether test cases pass while ignoring the code is fundamentally flawed. It's more AI hype than method: it sounds awesome, but in practice it’s riddled with issues. You're just swapping one problem for another.

Of course, you might argue: "But it seems to work! As long as we only care about tests and outputs, give the LLM enough time and tokens and it will eventually get the tests to pass."

Oh, yeah, sure! As the project grows and fills up with more and more repetitive LLM-generated logic, you can indeed just throw money at it and let the LLM slowly patch things up. But the key points are:

- Not reviewing is impossible: You’ve just shifted the target of your review from Production Code to Test Cases. That is still Code, and it still drains your brainpower.

- Maintenance costs compound exponentially: Once technical debt stacks up, fixing even a minor bug costs more and more tokens and time.

People are lazy. Who wouldn't want to dump all their work onto AI? (This is especially true for the vast majority of developers who don't even enjoy coding that much; to them, it's just a job.) So the narrative that "AI can do everything for you" is guaranteed to excite people while simultaneously peddling anxiety.

But who is the ultimate beneficiary? The AI development tool vendors. The more bloated the code and the more debugging rounds you need, the more money they make. The cruel truth they don’t want you to know is that one of software engineering's oldest adages, Fred Brooks's "No Silver Bullet," still holds true.